WebGPU..

Algorithms and Data Structures... |

| Understanding the WebGPU API and WebGPU Shading Language (WGSL) |  |

This section dives into the algorithms and data structures best suited for modern GPU programming with the WebGPU API and WGSL. Rather than covering the basics of the API or the shading language, it emphasizes practical techniques for efficient resource management, parallel computation, and memory optimization.

| Core Concepts in GPU Programming |  |

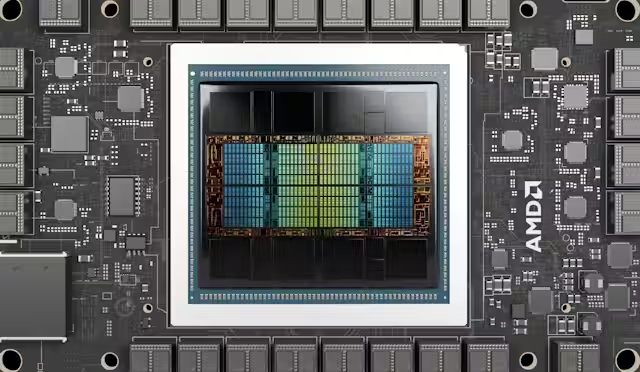

Let's start with the 'GPU'. GPU programming is fundamentally different from traditional CPU programming, demanding a distinct set of algorithms and data structures optimized for parallel execution and hardware constraints. The WebGPU API serves as a bridge to the GPU, providing a framework for managing resources, issuing rendering or compute commands, and controlling the flow of data between CPU and GPU. WGSL, the shading language associated with WebGPU, allows you to write programs that execute directly on the GPU, enabling highly parallelized tasks like rendering complex 3D scenes or performing large-scale numerical computations.

This section explains how the API and WGSL work together to facilitate efficient GPU programming. At its core, the API manages resources like buffers, textures, and shaders while enabling developers to define the "pipeline": the sequence of operations the GPU performs. A key aspect of using the API effectively lies in resource management: understanding how to allocate buffers, structure data, and optimize memory transfers to avoid bottlenecks. For example, data destined for the GPU must follow the memory layout the shader expects. WGSL assigns every type a size and an alignment, so structures often need padding between fields or at the end before they can be uploaded.
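The layout requirement can be made concrete with a small sketch. The helper below is hypothetical (`layoutStruct` and the `WGSL_TYPES` table are names chosen for illustration) and covers only a handful of WGSL types; it applies the basic rule that each field starts at a multiple of its alignment and that the struct size is rounded up to the largest field alignment. The extra constraints of the uniform address space on arrays and nested structs are not modeled.

```typescript
// Size and alignment, in bytes, of a few WGSL host-shareable types.
const WGSL_TYPES = {
  f32: { size: 4, align: 4 },
  vec2f: { size: 8, align: 8 },
  vec3f: { size: 12, align: 16 },
  vec4f: { size: 16, align: 16 },
  mat4x4f: { size: 64, align: 16 },
} as const;

type WgslType = keyof typeof WGSL_TYPES;

interface Field {
  name: string;
  type: WgslType;
}

function roundUp(value: number, alignment: number): number {
  return Math.ceil(value / alignment) * alignment;
}

// Computes the byte offset of every field and the padded size of the struct.
function layoutStruct(fields: Field[]): { offsets: Record<string, number>; size: number } {
  const offsets: Record<string, number> = {};
  let cursor = 0;
  let structAlign = 1;
  for (const field of fields) {
    const { size, align } = WGSL_TYPES[field.type];
    cursor = roundUp(cursor, align); // insert padding before the field if needed
    offsets[field.name] = cursor;
    cursor += size;
    structAlign = Math.max(structAlign, align);
  }
  return { offsets, size: roundUp(cursor, structAlign) };
}

// A hypothetical light struct: the vec3f forces 12 bytes of padding after `intensity`.
const light = layoutStruct([
  { name: "intensity", type: "f32" },
  { name: "position", type: "vec3f" },
  { name: "color", type: "vec4f" },
]);
console.log(light); // offsets: intensity 0, position 16, color 32; size 48
```

Reordering the fields so that `position` comes first would let `intensity` occupy the 4 bytes after the vec3f and shrink the struct to 32 bytes, which is why field order is worth considering when designing GPU-facing data structures.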

On the GPU side, WGSL programs—also called shaders—are responsible for performing computations on data. WGSL introduces constructs like structs and arrays to organize input and output data, while its functions allow for intricate mathematical operations. Algorithms written in WGSL often focus on dividing tasks into smaller units that can be processed in parallel by thousands of GPU cores, which requires careful design to minimize contention and maximize the use of available processing power.

Together, the API and WGSL create a powerful system for building GPU-accelerated applications. By understanding how to efficiently use these tools, developers can create scalable solutions for real-time rendering, simulations, machine learning, and other high-performance tasks.

| Buffer Allocation and Management |  |

Efficient buffer allocation and management are fundamental to GPU programming, as buffers serve as the primary mechanism for transferring and storing data between the CPU and GPU. This section explores strategies for creating and maintaining buffers, focusing on minimizing latency, maximizing throughput, and adhering to alignment requirements.

Buffers in the WebGPU ecosystem are categorized by their intended use, such as vertex, index, uniform, or storage buffers. Each type has specific constraints and optimization opportunities. For instance, uniform buffers are used to pass small amounts of frequently accessed data, while storage buffers handle larger datasets that require read-write access on the GPU. Selecting the appropriate buffer type for your data ensures optimal resource utilization.

Strategies for Efficient Buffer Allocation

1. Minimizing Memory Fragmentation

Allocating buffers in large, contiguous memory blocks helps reduce fragmentation. Using a memory pooling system enables multiple resources to share the same allocation, simplifying resource management and improving cache performance.

2. Alignment and Padding

GPU hardware often imposes strict alignment requirements. In WebGPU, for example, the offset at which a uniform buffer is bound must be a multiple of the device's minUniformBufferOffsetAlignment limit, which defaults to 256 bytes. Padding each sub-allocation up to the required alignment ensures these constraints are met, which prevents validation errors and reduces access penalties.

3. Dynamic vs. Static Buffers

Choosing between dynamic and static buffers depends on how frequently the buffer contents are updated. Static buffers are ideal for immutable data, such as static geometry or precomputed lookup tables, while dynamic buffers are suited for frequently updated content like animation data or particle simulations.
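As a rough illustration of pooling combined with alignment, the sketch below hands out aligned slices of one large allocation. `BumpAllocator` is a hypothetical helper, not part of the WebGPU API; it only tracks offsets, and the assumption is that the caller owns a single large GPU buffer of `capacity` bytes and binds or writes the returned slices.

```typescript
interface Slice {
  offset: number;
  size: number;
}

// A linear ("bump") sub-allocator over one large buffer.
class BumpAllocator {
  private cursor = 0;
  private readonly capacity: number;
  private readonly alignment: number;

  constructor(capacity: number, alignment: number) {
    this.capacity = capacity;
    this.alignment = alignment;
  }

  // Returns an aligned slice, or null when the pool is exhausted.
  allocate(size: number): Slice | null {
    const offset = Math.ceil(this.cursor / this.alignment) * this.alignment;
    if (offset + size > this.capacity) return null;
    this.cursor = offset + size;
    return { offset, size };
  }

  // Releases every slice at once, for example at the start of a new frame.
  reset(): void {
    this.cursor = 0;
  }
}

// A 1 KiB pool with 256-byte alignment (the assumed uniform offset alignment).
const pool = new BumpAllocator(1024, 256);
const sliceA = pool.allocate(64);  // offset 0
const sliceB = pool.allocate(100); // offset 256, after padding
const sliceC = pool.allocate(600); // does not fit: 512 + 600 > 1024
console.log(sliceA, sliceB, sliceC);
```

A bump allocator cannot free individual slices, which fits per-frame dynamic data well. Long-lived static data with varying lifetimes would more likely call for a free-list or buddy allocator.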

Updating Buffer Data

Efficiently updating buffer content is crucial for real-time applications. Strategies include:

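• Mapping Buffers: The API allows mapping buffers into the CPU address space for direct data writes. In WebGPU, mapping is asynchronous (mapAsync), and a buffer cannot be used by GPU commands while it is mapped. This approach is useful for frequent updates through staging buffers, but it requires planning when each buffer is mapped, unmapped, and submitted.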

• Double Buffering: Using two buffers alternately ensures that one buffer is updated while the other is being used by the GPU, preventing stalls.

• Partial Updates: Instead of rewriting the entire buffer, updating only the affected regions reduces overhead and improves performance.
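The partial-update strategy can be sketched with a small tracker that remembers which bytes changed on the CPU side. `DirtyRange` is a hypothetical helper; it merges all changes into one range and rounds it to 4-byte boundaries, since `GPUQueue.writeBuffer` requires offsets and sizes that are multiples of 4 bytes.

```typescript
// Tracks the smallest byte range that covers all CPU-side modifications.
class DirtyRange {
  private start = Infinity;
  private end = 0;

  // Records that bytes [offset, offset + size) were modified.
  mark(offset: number, size: number): void {
    this.start = Math.min(this.start, offset);
    this.end = Math.max(this.end, offset + size);
  }

  // Returns the 4-byte-aligned range to upload and clears the tracker.
  take(): { offset: number; size: number } | null {
    if (this.end <= this.start) return null; // nothing changed
    const offset = Math.floor(this.start / 4) * 4;
    const end = Math.ceil(this.end / 4) * 4;
    this.start = Infinity;
    this.end = 0;
    return { offset, size: end - offset };
  }
}

const dirty = new DirtyRange();
dirty.mark(130, 10); // bytes 130..140
dirty.mark(64, 6);   // bytes 64..70
const upload = dirty.take(); // { offset: 64, size: 76 }
const nothingLeft = dirty.take(); // null
console.log(upload, nothingLeft);
```

Merging into a single range trades some redundant bytes for fewer upload calls. When edits are far apart, tracking several disjoint ranges may be the better choice.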

Buffer Lifetime Management

Managing the lifecycle of buffers is critical for avoiding memory leaks and ensuring efficient use of GPU resources. Strategies include:

• Reference Counting: Implementing reference counting ensures that buffers are deallocated only when no longer in use.

• Deferred Destruction: Resources marked for deletion are held until the GPU completes all tasks using them, preventing crashes or undefined behavior.
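Both lifetime strategies can be combined, as in the following sketch. All names here (`Destroyable`, `DeferredDestroyer`, `RefCounted`) are hypothetical. The frame counter is an assumption as well: the application is expected to learn by some means, for instance by awaiting `queue.onSubmittedWorkDone()`, which frame the GPU has finished.

```typescript
interface Destroyable {
  destroy(): void;
}

// Holds retired resources until the GPU has finished the frame that last used them.
class DeferredDestroyer {
  private pending: { resource: Destroyable; frame: number }[] = [];

  retire(resource: Destroyable, frame: number): void {
    this.pending.push({ resource, frame });
  }

  // Destroys everything whose last-use frame has completed; returns the count.
  collect(completedFrame: number): number {
    const ready = this.pending.filter((p) => p.frame <= completedFrame);
    this.pending = this.pending.filter((p) => p.frame > completedFrame);
    for (const p of ready) p.resource.destroy();
    return ready.length;
  }
}

// A reference-counted handle that retires its resource when the count hits zero.
class RefCounted {
  private count = 1;
  private readonly resource: Destroyable;
  private readonly destroyer: DeferredDestroyer;

  constructor(resource: Destroyable, destroyer: DeferredDestroyer) {
    this.resource = resource;
    this.destroyer = destroyer;
  }

  retain(): void {
    this.count++;
  }

  release(currentFrame: number): void {
    if (this.count === 0) throw new Error("released more often than retained");
    this.count--;
    if (this.count === 0) this.destroyer.retire(this.resource, currentFrame);
  }
}

const destroyLog: string[] = [];
const destroyer = new DeferredDestroyer();
const handle = new RefCounted({ destroy: () => { destroyLog.push("destroyed"); } }, destroyer);
handle.retain();      // a second owner appears
handle.release(3);    // first owner done
handle.release(3);    // last owner done during frame 3: resource is retired
const freedEarly = destroyer.collect(2); // 0, frame 3 is still in flight
const freedLate = destroyer.collect(3);  // 1, safe to destroy now
console.log(freedEarly, freedLate, destroyLog);
```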

By mastering buffer allocation and management techniques, developers can ensure that their applications maximize GPU performance, reduce latency, and maintain scalability for increasingly complex workloads.

| Resource Binding and Descriptor Strategies |  |

Efficiently binding resources such as buffers, textures, and samplers to the GPU is a critical aspect of high-performance GPU programming. Resource binding in the WebGPU API is achieved through bind groups and descriptors, which define the layout and relationships of resources in GPU memory. This section focuses on strategies for organizing and managing these bindings to optimize performance and scalability.

The Role of Bind Groups

Bind groups act as containers for GPU resources, grouping related resources together and linking them to shaders. By designing bind groups effectively, developers can reduce the overhead associated with frequent binding changes and ensure efficient resource access during pipeline execution. Key strategies include:

• Grouping Related Resources: Consolidating resources that are accessed together within a single bind group minimizes binding updates and improves cache locality. For example, a uniform buffer containing transformation matrices and a sampler for texture data can be grouped together if both are used in the same shader.

• Minimizing Bind Group Changes: Frequent switching of bind groups can stall the GPU pipeline. Structuring data to require fewer switches improves rendering or compute performance.
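One common way to reduce bind group changes is to sort draw calls so that draws sharing a bind group run back to back. The sketch below is illustrative only: the `Draw` shape and the idea of one bind group per `materialId` are assumptions, and it simply counts how many switches a given draw order would cause.

```typescript
interface Draw {
  mesh: string;
  materialId: number; // assumed: one bind group per material
}

// Counts how often the bound material changes when drawing in the given order.
function countSwitches(draws: Draw[]): number {
  let switches = 0;
  let current: number | null = null;
  for (const draw of draws) {
    if (draw.materialId !== current) {
      switches++;
      current = draw.materialId;
    }
  }
  return switches;
}

const draws: Draw[] = [
  { mesh: "rock", materialId: 2 },
  { mesh: "tree", materialId: 1 },
  { mesh: "boulder", materialId: 2 },
  { mesh: "bush", materialId: 1 },
];

// Sorting by material groups draws that share a bind group.
const sortedDraws = [...draws].sort((x, y) => x.materialId - y.materialId);

const switchesBefore = countSwitches(draws);      // 4
const switchesAfter = countSwitches(sortedDraws); // 2
console.log(switchesBefore, switchesAfter);
```

Sorting by bind group can conflict with other ordering needs, such as back-to-front ordering for transparency, so real renderers usually sort on a composite key.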

Descriptor Layouts and Reusability

The descriptor layout, which WebGPU calls a bind group layout (GPUBindGroupLayout), specifies the structure of a bind group, defining the types and binding slots of the resources it contains. Creating flexible and reusable layouts is essential for scalable applications. Best practices include:

• Using Shared Descriptor Layouts: For complex applications, such as those rendering multiple objects or scenes, using consistent descriptor layouts across pipelines simplifies resource management.

• Adapting for Dynamic Resources: Some resources, like dynamic uniform buffers, require offsets to support multiple objects sharing the same buffer. Designing descriptor layouts with dynamic offsets allows efficient updates without redefining layouts.

Binding Strategies for Common Resource Types

1. Uniform Buffers:

Uniform buffers store frequently accessed small data, such as camera matrices or lighting parameters. Binding a single large uniform buffer with multiple object data packed into it can reduce the number of bindings and updates. Proper alignment and offsets within the buffer ensure smooth access.

2. Storage Buffers:

Storage buffers support larger datasets and read-write access. These are well-suited for algorithms like particle simulations or physics calculations. Segmenting storage buffers into regions allocated for specific workloads can reduce resource contention and improve GPU utilization.

3. Textures and Samplers:

Binding textures efficiently requires consideration of sampling frequency and access patterns. Combining textures into an array or atlas minimizes the number of texture bindings and allows shaders to access multiple textures through indexed lookups.
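For the atlas approach, the shader needs to remap each texture's own UV coordinates into the sub-rectangle the texture occupies in the atlas. The sketch below assumes a uniform grid atlas with row-major tile numbering; `atlasRegion` and `toAtlasUv` are hypothetical helpers whose results would typically be uploaded as per-object uniforms.

```typescript
interface AtlasRegion {
  offsetU: number;
  offsetV: number;
  scaleU: number;
  scaleV: number;
}

// Returns the UV sub-rectangle of tile `index` in a columns x rows grid atlas.
function atlasRegion(index: number, columns: number, rows: number): AtlasRegion {
  if (index < 0 || index >= columns * rows) {
    throw new RangeError(`tile ${index} is outside a ${columns}x${rows} atlas`);
  }
  const col = index % columns;
  const row = Math.floor(index / columns);
  return {
    offsetU: col / columns,
    offsetV: row / rows,
    scaleU: 1 / columns,
    scaleV: 1 / rows,
  };
}

// Maps a tile-local UV in [0, 1] to the corresponding atlas UV.
function toAtlasUv(region: AtlasRegion, u: number, v: number): [number, number] {
  return [region.offsetU + u * region.scaleU, region.offsetV + v * region.scaleV];
}

const region = atlasRegion(5, 4, 4);             // column 1, row 1 of a 4x4 atlas
const tileCenter = toAtlasUv(region, 0.5, 0.5);  // [0.375, 0.375]
console.log(region, tileCenter);
```

Atlases can bleed across tile borders under filtering and mipmapping, which is one reason a texture array (one layer per texture) is often preferred when all textures share a size.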

Dynamic Resource Binding

Dynamic bindings provide flexibility for applications with varying resource requirements at runtime. Strategies include:

• Indexing Resources Dynamically: Using dynamic indexing in shaders, for example selecting a layer of a texture array or a region of a storage buffer by index, avoids frequent bind group updates.

• Managing Dynamic Offsets: Leveraging dynamic offsets in bind groups enables multiple objects to share a single buffer, significantly reducing the memory footprint and update overhead.
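The bookkeeping behind dynamic offsets is mostly alignment arithmetic, sketched below. The 256-byte value is an assumption matching WebGPU's default `minUniformBufferOffsetAlignment`; a real application would read the limit from the device. `planDynamicOffsets` is a hypothetical helper.

```typescript
// Assumed alignment; in practice read device.limits.minUniformBufferOffsetAlignment.
const OFFSET_ALIGNMENT = 256;

interface OffsetPlan {
  stride: number;      // bytes between consecutive objects
  bufferSize: number;  // total size of the shared buffer
  offsets: number[];   // one dynamic offset per object
}

// Plans a shared uniform buffer in which each object gets an aligned slot.
function planDynamicOffsets(
  objectCount: number,
  bytesPerObject: number,
  alignment: number,
): OffsetPlan {
  const stride = Math.ceil(bytesPerObject / alignment) * alignment;
  const offsets: number[] = [];
  for (let i = 0; i < objectCount; i++) {
    offsets.push(i * stride);
  }
  return { stride, bufferSize: stride * objectCount, offsets };
}

// Three objects, each with a mat4x4f plus a vec4f (64 + 16 = 80 bytes).
const plan = planDynamicOffsets(3, 80, OFFSET_ALIGNMENT);
console.log(plan); // stride 256, bufferSize 768, offsets [0, 256, 512]
```

At draw time each offset would be supplied through the dynamic offsets argument of `setBindGroup`, with the corresponding layout entry declaring `hasDynamicOffset: true`. Note that 80 bytes of data occupy 256 bytes per object here, so the saving is in bind groups and updates rather than in raw memory.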

1. Batching Resource Updates:

Updating multiple resources in a single operation reduces API overhead and ensures better utilization of GPU memory bandwidth.

2. Using Persistent Bind Groups:

Resources that do not change frequently, such as global lighting data, can be placed in persistent bind groups to avoid redundant bindings.

3. Profiling and Debugging:

Tools like frame capture and pipeline analysis can help identify bottlenecks related to resource binding. Iterative refinement ensures the most effective strategies are employed.

By understanding and applying these resource binding and descriptor strategies, developers can minimize GPU stalls, enhance resource access efficiency, and build scalable applications capable of handling complex rendering and compute tasks.

| Parallel Computation with GPU Pipelines |  |

Modern GPUs are designed to execute thousands of operations simultaneously, making them ideal for parallel computation tasks. Leveraging this capability requires an understanding of GPU pipelines and how to structure algorithms to exploit their parallel nature effectively. This section focuses on strategies for designing and implementing parallel algorithms using compute and rendering pipelines.

Understanding GPU Pipelines

GPU pipelines can be divided into two primary types: graphics pipelines, which handle rendering operations, and compute pipelines, which execute general-purpose computations. Compute pipelines are particularly well-suited for tasks like physics simulations, data analysis, and machine learning, as they provide fine-grained control over parallel processing.

Key characteristics of GPU pipelines include:

• Thread Grouping: Compute pipelines execute threads in groups, known as workgroups. Each workgroup processes a subset of the data, with its threads capable of sharing memory and synchronizing with one another.

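• Memory Hierarchy: GPUs have a multi-tiered memory system, including global, shared, and private memory. In WGSL these correspond roughly to the storage and uniform address spaces, the workgroup address space, and the private and function address spaces. Effective use of these tiers is crucial for minimizing latency and maximizing throughput.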

Designing Parallel Algorithms

1. Data Partitioning:

Divide the data into independent chunks that can be processed concurrently by separate workgroups. For example, in a matrix multiplication algorithm, each workgroup can compute one block of the resulting matrix.

2. Load Balancing:

Ensure work is evenly distributed across all GPU cores. Uneven workloads lead to idle threads, wasting computational resources. Strategies like dynamic work distribution or fine-grained task partitioning can address this issue.

3. Synchronization and Dependencies:

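Minimize inter-thread dependencies within and across workgroups. When synchronization is required inside a workgroup, use barriers such as WGSL's workgroupBarrier() and storageBarrier() to coordinate threads. WGSL has no barrier that spans workgroups within a single dispatch, so dependencies between workgroups are usually handled by splitting the work into separate dispatches.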
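The partitioning step usually comes down to a ceiling division when sizing a dispatch, plus a bounds check for the last, partially filled workgroup. The helpers below (`workgroupCount`, `dispatchSize2D`, `rangeFor`) are hypothetical names for that arithmetic.

```typescript
// Number of workgroups needed to cover `items` with `workgroupSize` invocations each.
function workgroupCount(items: number, workgroupSize: number): number {
  return Math.ceil(items / workgroupSize);
}

// Dispatch size (x, y) when a rows x cols output is split into tile x tile blocks.
function dispatchSize2D(rows: number, cols: number, tile: number): [number, number] {
  return [workgroupCount(cols, tile), workgroupCount(rows, tile)];
}

// Element range owned by one workgroup, clamped so the last group stays in bounds.
function rangeFor(
  groupId: number,
  workgroupSize: number,
  items: number,
): { start: number; end: number } {
  const start = groupId * workgroupSize;
  return { start, end: Math.min(start + workgroupSize, items) };
}

const groups = workgroupCount(1000, 64);          // 16
const lastRange = rangeFor(groups - 1, 64, 1000); // { start: 960, end: 1000 }
const grid = dispatchSize2D(100, 130, 16);        // [9, 7]
console.log(groups, lastRange, grid);
```

In a shader, the clamping in `rangeFor` corresponds to an early return when the global invocation index is past the end of the data, since 16 groups of 64 invocations cover 1024 slots for only 1000 items.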

Efficient Use of Workgroups and Threads

• Workgroup Size: Choose a workgroup size (the thread block size in CUDA terms) that maximizes GPU occupancy and is a multiple of the hardware's warp or wavefront size, which is 32 or 64 threads on many GPUs. Sizes such as 64, 128, or 256 are common starting points; WebGPU's default limit is 256 invocations per workgroup.

• Shared Memory Utilization: Take advantage of shared memory within workgroups for intermediate calculations. For instance, in a reduction algorithm, threads in a workgroup can collaboratively compute partial sums using shared memory.
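The shared-memory reduction can be simulated on the CPU to show its access pattern. This is a sketch, not GPU code: the inner loop over `localId` stands in for invocations that would run in parallel, and the end of each outer iteration is where a workgroup barrier would go. It assumes the workgroup size is a power of two.

```typescript
// Simulates the tree reduction one workgroup performs in shared memory.
// `values.length` must be a power of two.
function reduceWorkgroup(values: number[]): number {
  const shared = values.slice(); // stands in for a workgroup-shared array
  for (let stride = shared.length >> 1; stride > 0; stride >>= 1) {
    // On the GPU, all invocations with localId < stride do this step in parallel...
    for (let localId = 0; localId < stride; localId++) {
      shared[localId] += shared[localId + stride];
    }
    // ...and a workgroup barrier here separates one step from the next.
  }
  return shared[0];
}

// Splits the input across workgroups, then combines the partial sums.
function reduceAll(data: number[], workgroupSize: number): number {
  const partials: number[] = [];
  for (let base = 0; base < data.length; base += workgroupSize) {
    const chunk = data.slice(base, base + workgroupSize);
    while (chunk.length < workgroupSize) chunk.push(0); // pad the last group
    partials.push(reduceWorkgroup(chunk));
  }
  // A second, smaller dispatch would normally reduce the partial sums.
  return partials.reduce((sum, p) => sum + p, 0);
}

const oneToHundred = Array.from({ length: 100 }, (_, i) => i + 1);
const total = reduceAll(oneToHundred, 8); // 5050
console.log(total);
```

Each step halves the number of active invocations, so a workgroup of size n finishes in log2(n) steps instead of n - 1 sequential additions.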

Optimizing Memory Access Patterns

Memory access patterns significantly affect performance. Strategies to optimize memory usage include:

• Coalesced Access: Arrange data in memory so that threads access contiguous addresses, minimizing latency and maximizing memory bandwidth.

• Avoiding Bank Conflicts: When using shared memory, ensure threads access different memory banks to avoid conflicts that serialize memory access.

• Caching Frequently Accessed Data: Use local or shared memory to store frequently accessed data, reducing reliance on slower global memory.
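Bank conflicts are easy to reason about with a small model. The sketch below assumes shared memory is split into 32 banks of 4-byte words, a common arrangement but not something WebGPU guarantees or exposes, and reports the worst-case number of invocations that land on the same bank for a given access stride.

```typescript
// Assumption: 32 banks, each one 4-byte word wide. Actual hardware varies.
const BANKS = 32;

// Worst-case number of invocations hitting one bank when invocation i
// reads word i * strideWords from shared memory.
function conflictDegree(invocations: number, strideWords: number): number {
  const hits = new Array<number>(BANKS).fill(0);
  for (let i = 0; i < invocations; i++) {
    hits[(i * strideWords) % BANKS]++;
  }
  return Math.max(...hits);
}

const unitStride = conflictDegree(32, 1);    // 1: every invocation has its own bank
const strideTwo = conflictDegree(32, 2);     // 2: pairs of invocations collide
const columnAccess = conflictDegree(32, 32); // 32: fully serialized
const paddedColumn = conflictDegree(32, 33); // 1: one word of padding per row fixes it
console.log(unitStride, strideTwo, columnAccess, paddedColumn);
```

The last two lines show the classic padding trick: a 32x32 tile read column-wise serializes completely, while declaring the tile as 32x33 spreads the same accesses over all banks.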

Real-World Applications

1. Image Processing:

Algorithms like convolution filters, edge detection, and Gaussian blurring can be parallelized by assigning each pixel or block of pixels to a thread.

2. Physics Simulations:

Parallelizing particle-based simulations (e.g., fluid dynamics or collision detection) allows real-time performance for complex systems.

3. Matrix Operations:

Tasks like matrix multiplication or solving linear equations benefit greatly from the parallel structure of GPU pipelines, with each thread computing a portion of the result.
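As a CPU reference for the matrix case, the sketch below splits the output matrix into tiles and computes each tile independently, mirroring how one workgroup would own one block of the result. The function names are hypothetical, and the loops run sequentially here; on a GPU every block, and every element within a block, would be computed in parallel.

```typescript
type Matrix = number[][];

// Computes one tile x tile block of C = A * B, as a single workgroup would.
function computeBlock(
  a: Matrix, b: Matrix, c: Matrix,
  blockRow: number, blockCol: number, tile: number,
): void {
  const rows = a.length;
  const inner = b.length;
  const cols = b[0].length;
  const rowEnd = Math.min((blockRow + 1) * tile, rows);
  const colEnd = Math.min((blockCol + 1) * tile, cols);
  for (let r = blockRow * tile; r < rowEnd; r++) {
    for (let col = blockCol * tile; col < colEnd; col++) {
      // Each (r, col) pair corresponds to one invocation.
      let sum = 0;
      for (let k = 0; k < inner; k++) sum += a[r][k] * b[k][col];
      c[r][col] = sum;
    }
  }
}

// Multiplies two matrices block by block; blocks are independent of each other.
function matmulBlocked(a: Matrix, b: Matrix, tile: number): Matrix {
  const rows = a.length;
  const cols = b[0].length;
  const c: Matrix = Array.from({ length: rows }, () => new Array<number>(cols).fill(0));
  for (let br = 0; br < Math.ceil(rows / tile); br++) {
    for (let bc = 0; bc < Math.ceil(cols / tile); bc++) {
      computeBlock(a, b, c, br, bc, tile);
    }
  }
  return c;
}

const product = matmulBlocked(
  [[1, 2, 3], [4, 5, 6]],
  [[7, 8], [9, 10], [11, 12]],
  2,
);
console.log(product); // [[58, 64], [139, 154]]
```

Because no block reads another block's output, the blocks need no synchronization with each other, which is the property that makes this decomposition map well onto workgroups.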

Profiling and Debugging Parallel Code

Efficient parallel computation often requires profiling tools to identify bottlenecks in memory access, thread utilization, and synchronization. Tools like GPU profilers and frame debuggers can provide insights into performance issues, guiding optimization efforts.

By structuring algorithms to align with GPU pipeline architectures, developers can harness the full power of parallel computation, enabling high-performance solutions for complex, data-intensive tasks.
